Embeddings

1. Objective

Text similarity tasks involve calculating semantic similarity between pairs of sentences. For this task, the All-Mpnet-Base-V2-Embedding model is recommended, which is optimized for generating embeddings that can be used for computing similarity between texts.

Dataset Preparation

Prepare your dataset in the appropriate format for the embedding task. The dataset should be prepared in CSV format with three columns: "text1", "text2" and "score"

- text1: The first piece of text input.

- text2: The second piece of text input

- score: A numerical value (e.g., similarity score, relevance score ) indicating the relationship between text1 and text2. Typically, this would be a float value such as a cosine similarity score or a label reflecting their semantic similarity and value would lie between 0 and 1

"text1", "text2", "score"

"The Eiffel Tower is in Paris, France", "The Statue of Liberty is located in New York", 0.23

"Apple is a technology company","Orange is a fruit", 0.05

"Machine learning models can improve", "Machine learning models can improve", 0.85

2. Finetuning Preparation

Please refer to the in-depth guide on Finetuning on Emissary here - Quickstart Guide.

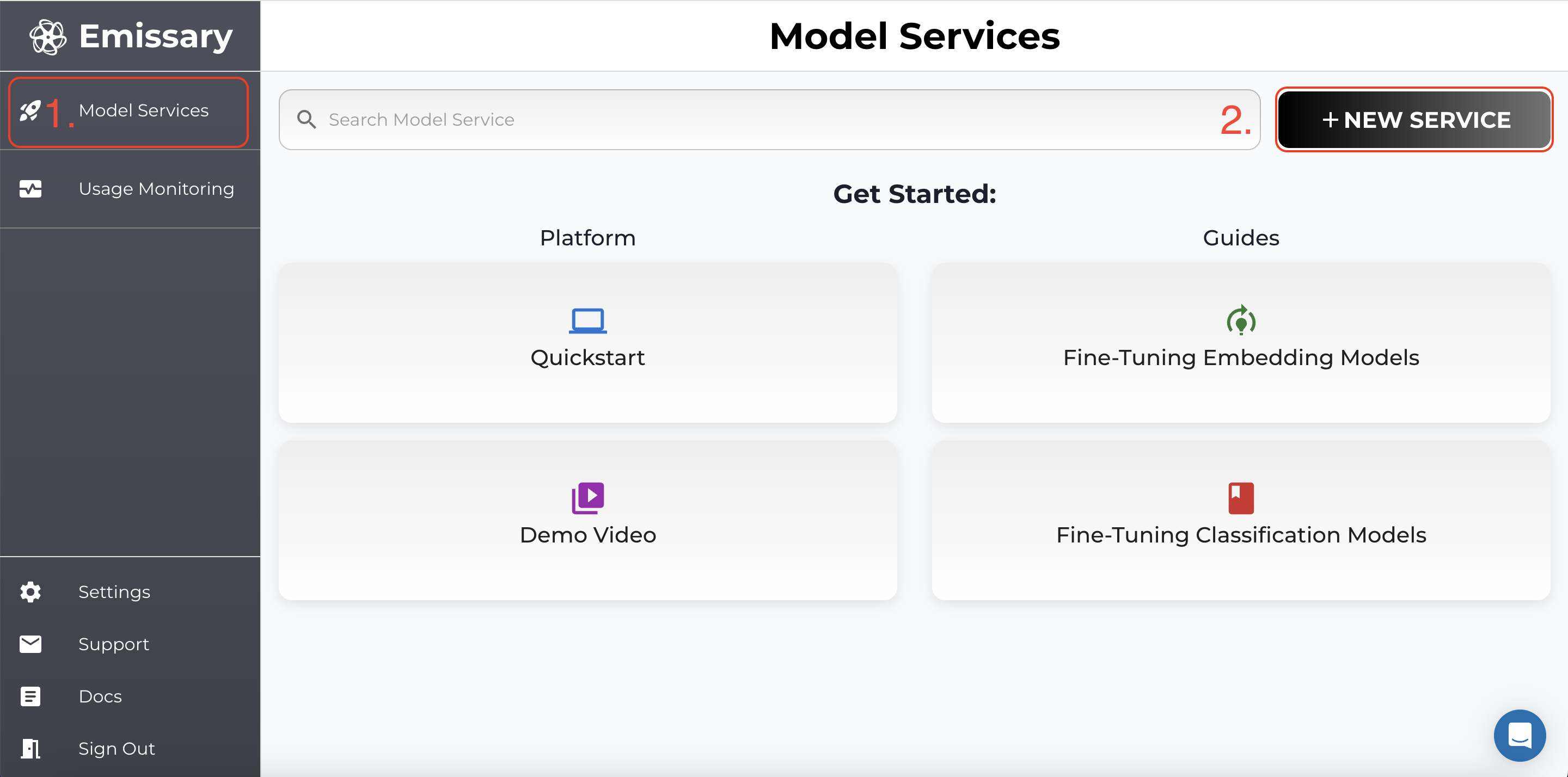

Create Model Service

Navigate to Dashboard arriving at Model Services, the default page on the Emissary platform.

- Click + NEW SERVICE in the dashboard.

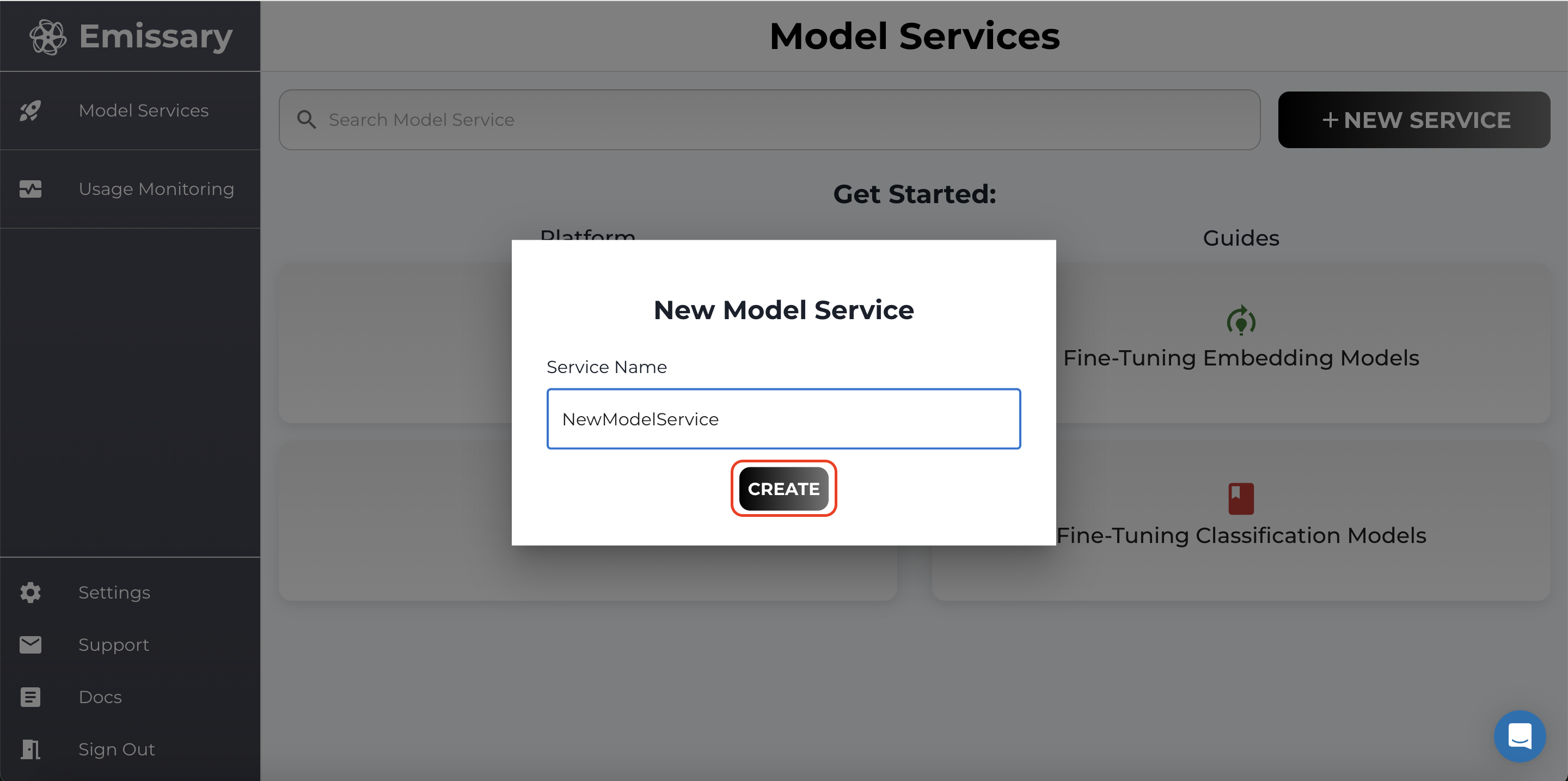

- In the pop-up, enter a new model service name, and click CREATE.

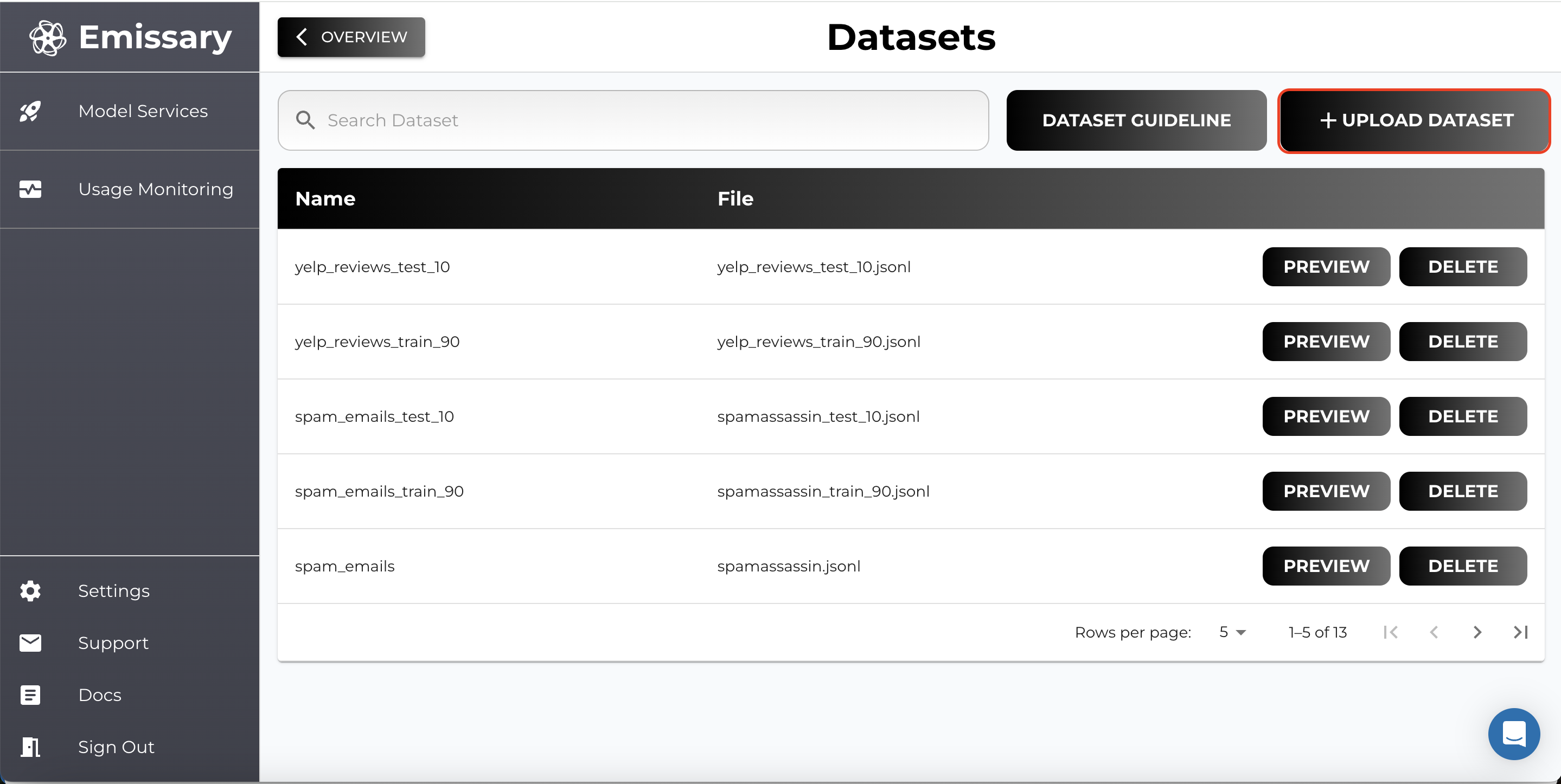

Uploading Datasets

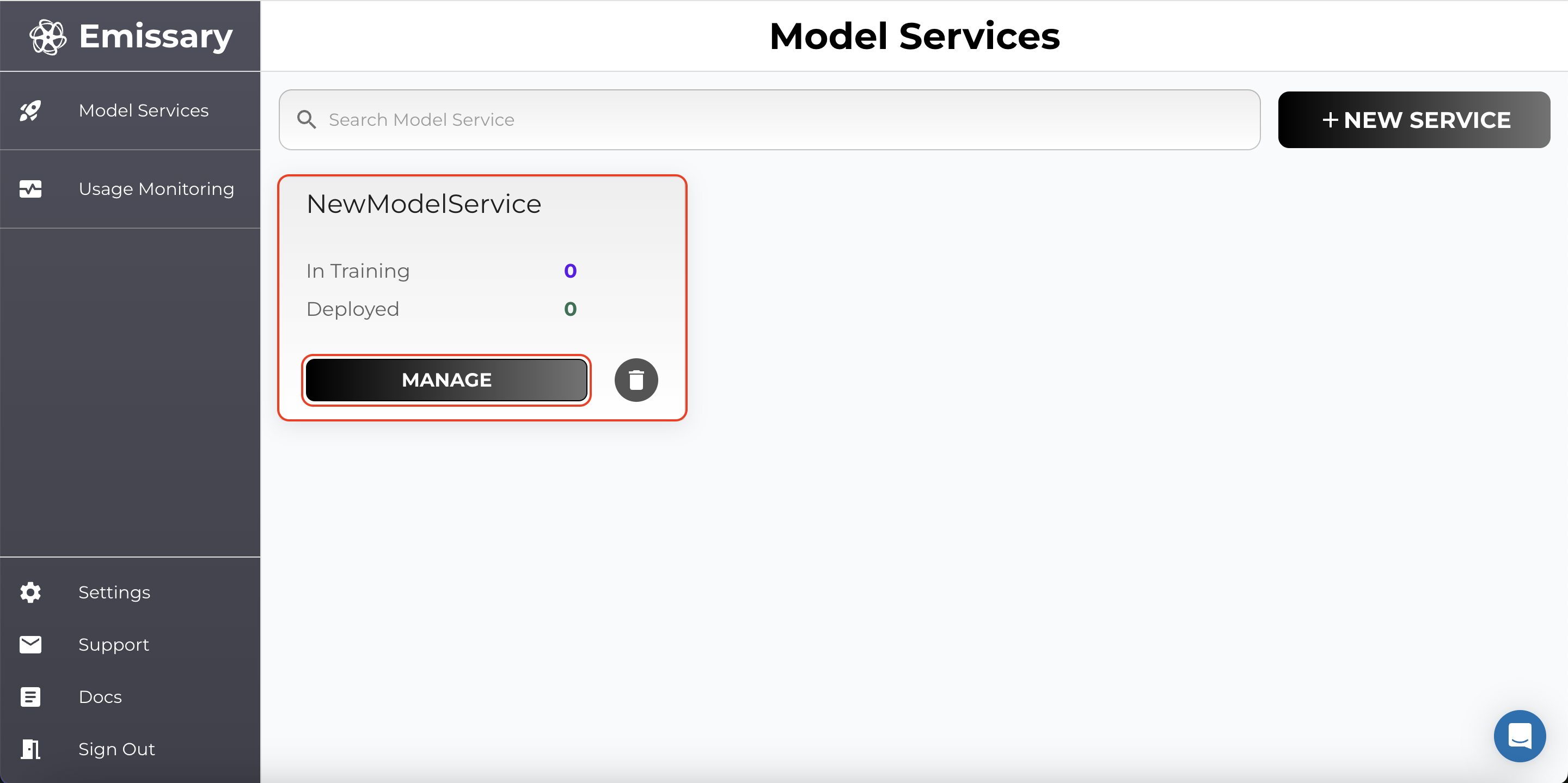

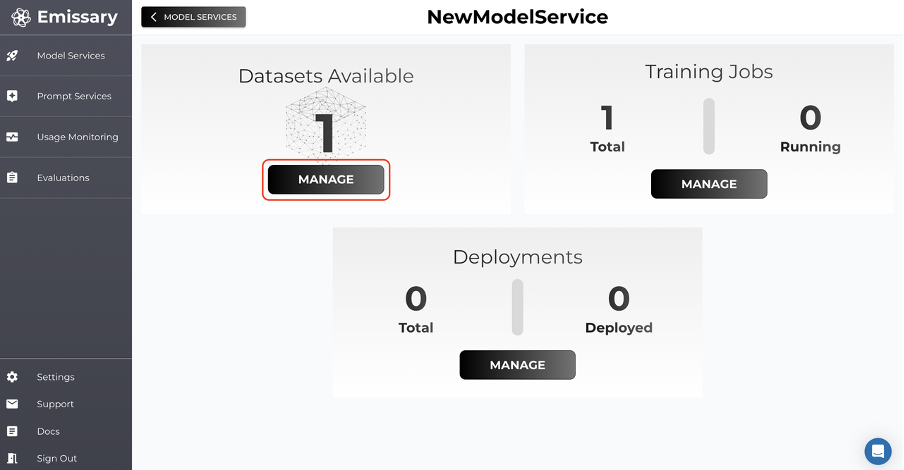

A tile is created for your task. Click Manage to enter the task workspace.

-

Click MANAGE in the Datasets Available tile.

-

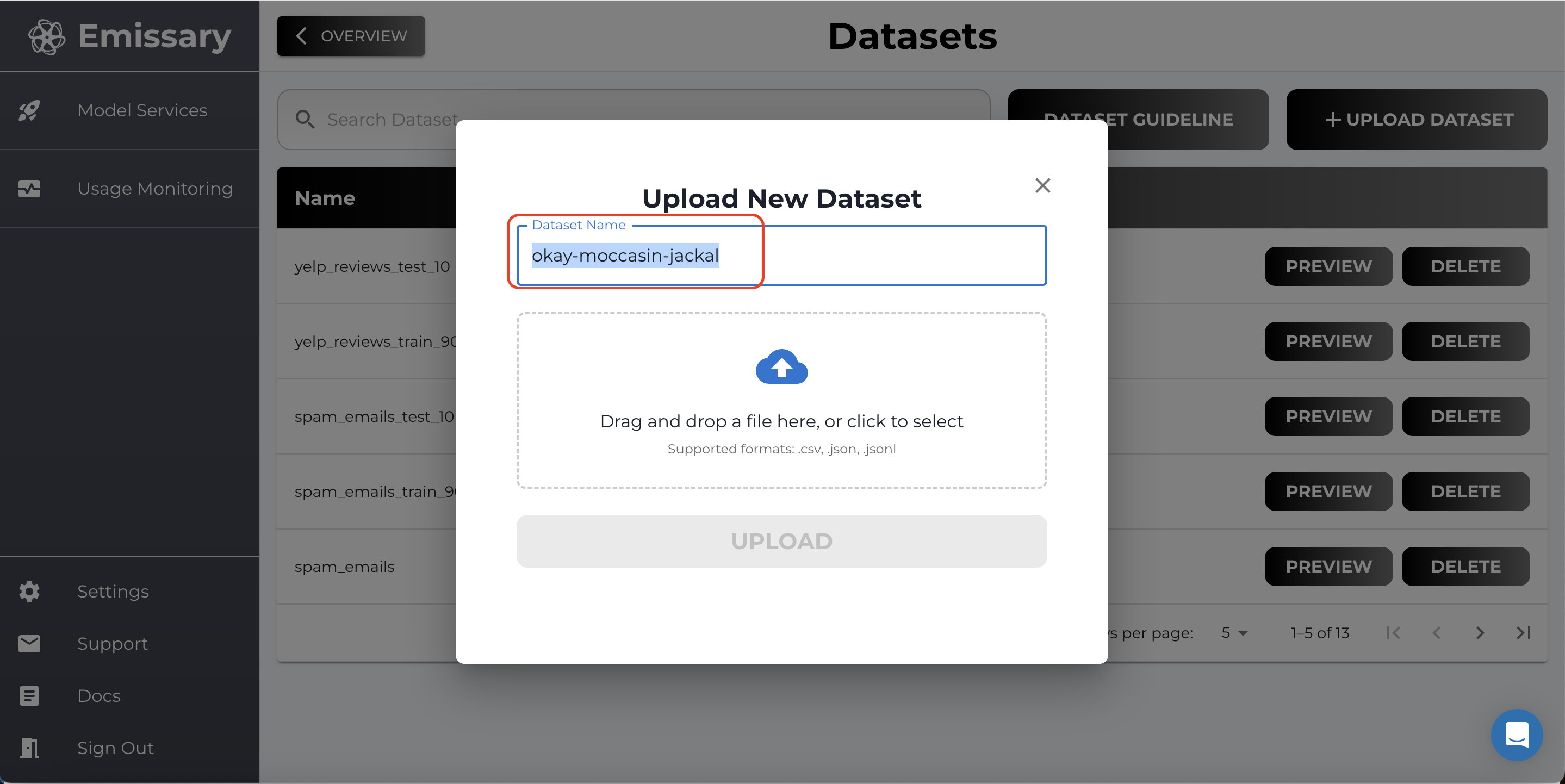

Click on + UPLOAD DATASET and select training and test datasets.

-

Name datasets clearly to distinguish between training and test data (e.g., embedding_data_train, embedding_data_test).

Important Note: For LLM models, it is recommended to use JSON Lines (.jsonl) format.

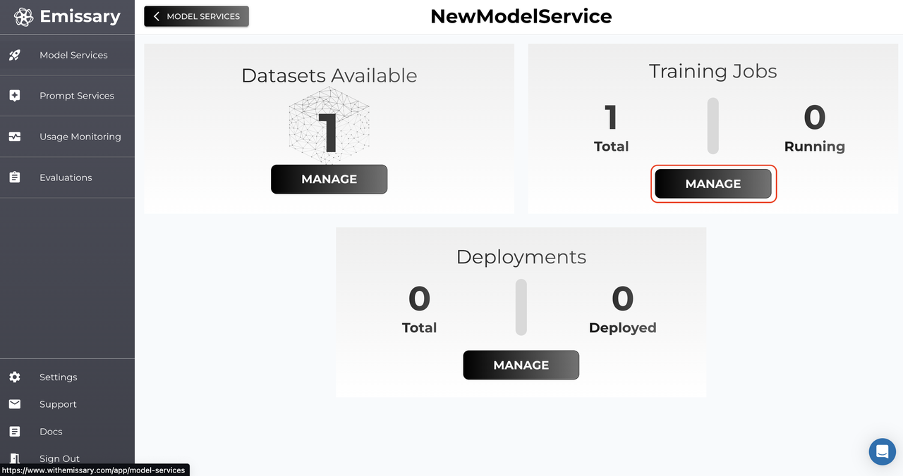

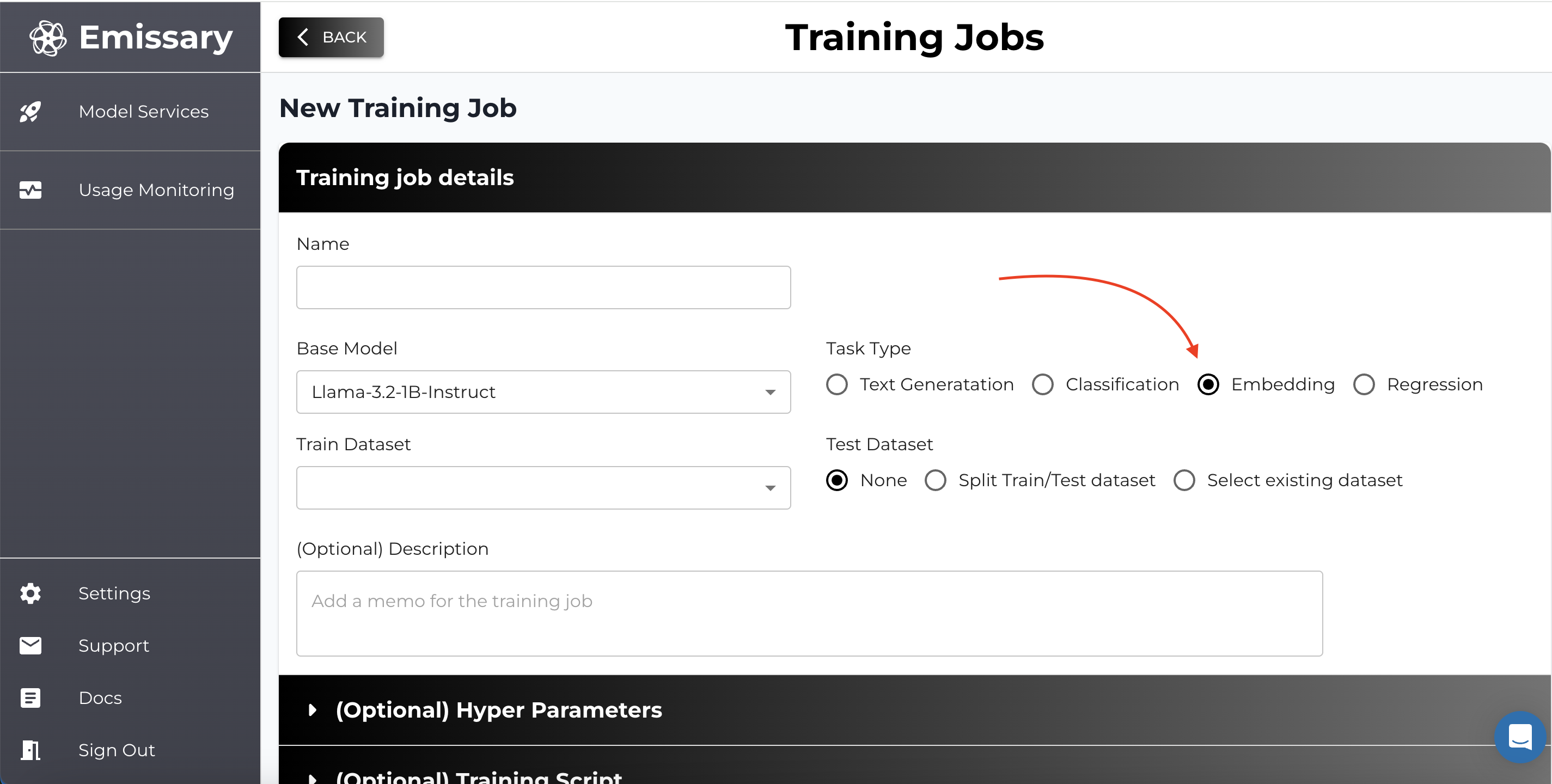

3. Model Finetuning

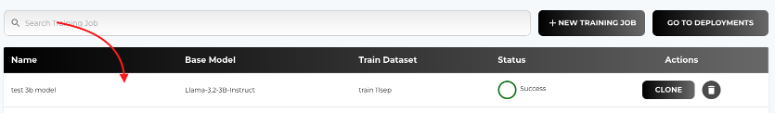

Now, go back one panel by clicking OVERVIEW and then click MANAGE in the Training Jobs tile.

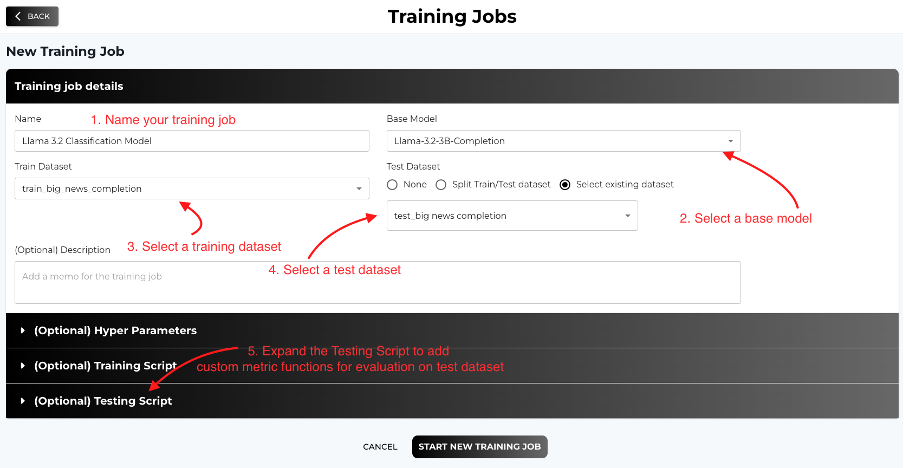

Here, we’ll kick off finetunes. The shortest path to finetuning a model is by clicking +NEW TRAINING JOB, naming the output model, picking a backbone (base model), selecting the training dataset (you must have uploaded it in the step before), and finally hitting START NEW TRAINING JOB.

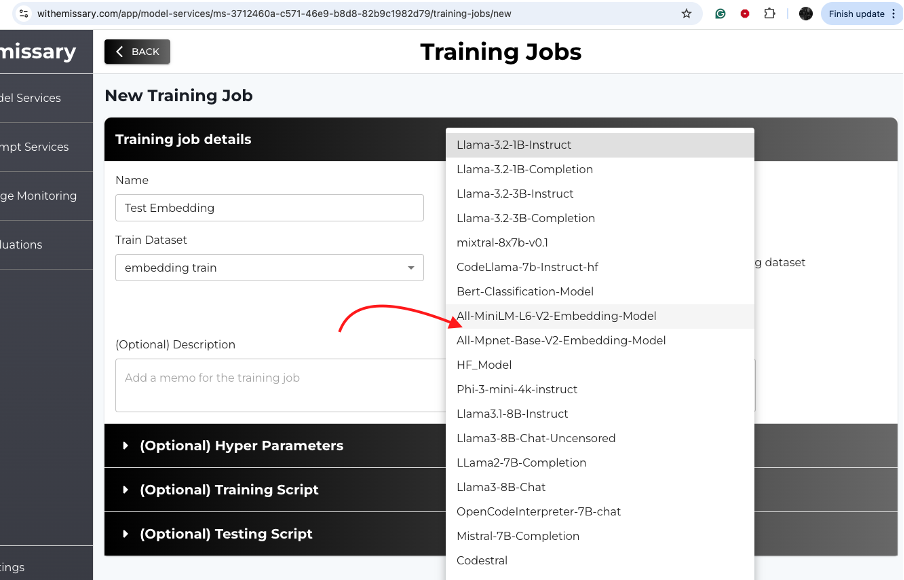

Selecting Base Model

-

For Embeddings and Text Similarity related tasks, we recommend using the All-Mpnet-Base-V2-Embedding model.

-

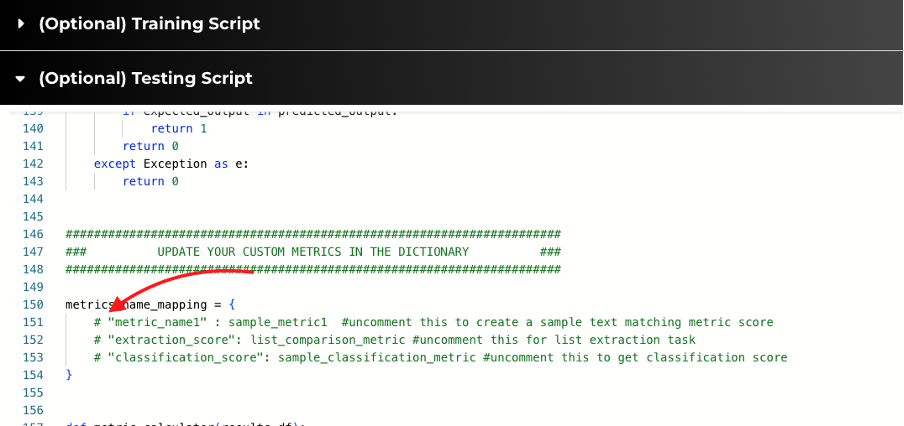

A custom function that generates a sample text matching metric score has been provided. Uncomment metric_name1 to use. You could also add your own custom metric functions similarly.

Training Parameter Configuration

Please refer to the in-depth guide on configuring training parameters here - Finetuning Parameter Guide.

4. Model Monitoring & Evaluation

Using Test Datasets

Including a test dataset allows you to evaluate the model's performance during training.

- Per Epoch Evaluation: The platform evaluates the model at each epoch using the test dataset.

- Metrics and Outputs: View evaluation metrics and generated outputs for test samples.

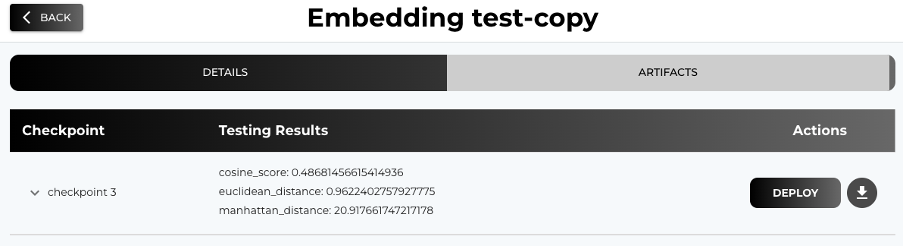

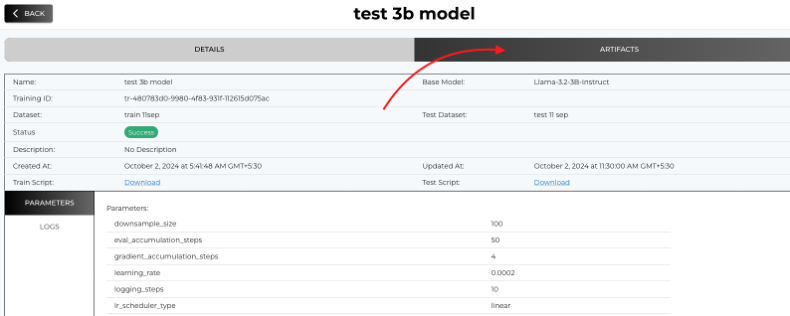

- Post-completion of training: Check results in Training Job --> Artifacts

Evaluation Metric Interpretation

- Accuracy: Indicates the percentage of correct predictions.

- F1 Score: Balances precision and recall; useful for imbalanced datasets.

- Custom Metrics: Define custom metrics in the testing script to suit your evaluation needs.

5. Deployment

Refer to the in-depth walkthrough on deploying a model on Emissary here - Deployment Guide. Deploying your models allows you to serve them and integrate them into your applications.

Finetuned Model Deployment

- Navigate to the Training Jobs Page. From the list of the finetuning jobs, select the one you want to deploy.

- Go to the ARTIFACTS tab.

- Select a Checkpoint to Deploy.

6. Best Practices

- Start Small: Begin with a smaller dataset to validate your setup.

- Monitor Training: Keep an eye on training logs and metrics.

- Iterative Testing: Use the test dataset to iteratively improve your model.

- Data Format: Use the recommended data formats for your chosen model to ensure compatibility and optimal performance.