Data Extraction - Guide

Objective

This guide walks through finetuning the Llama-3.2-3B-Completion model for data extraction tasks. The model is well suited to understanding natural language and extracting structured data from unstructured text.

Finetuning Preparation

For an in-depth guide to finetuning on Emissary, refer to the Quickstart Guide.

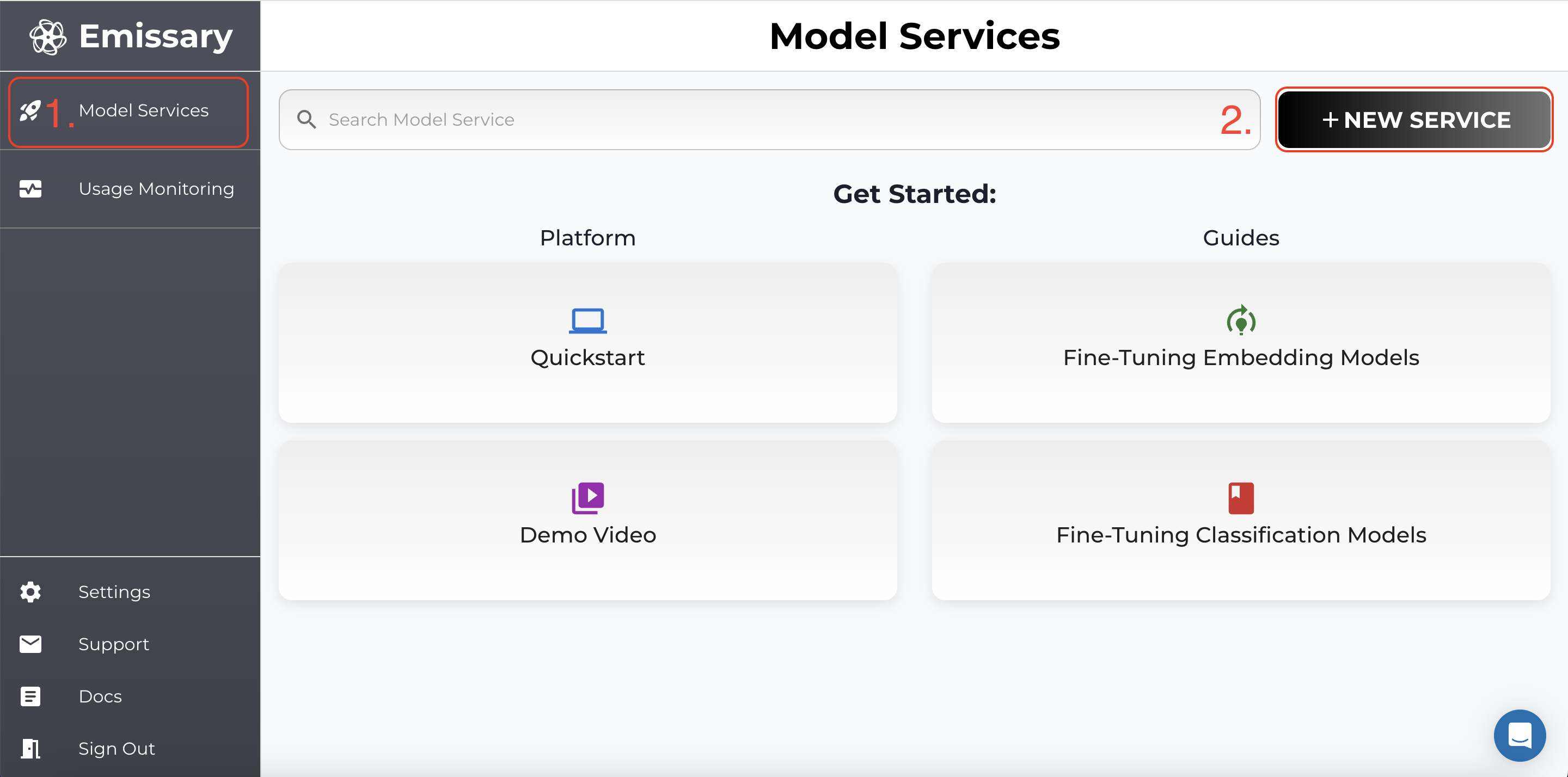

Create Model Service

Navigate to the Dashboard, which opens on Model Services, the default page on the Emissary platform.

- Click + NEW SERVICE in the dashboard.

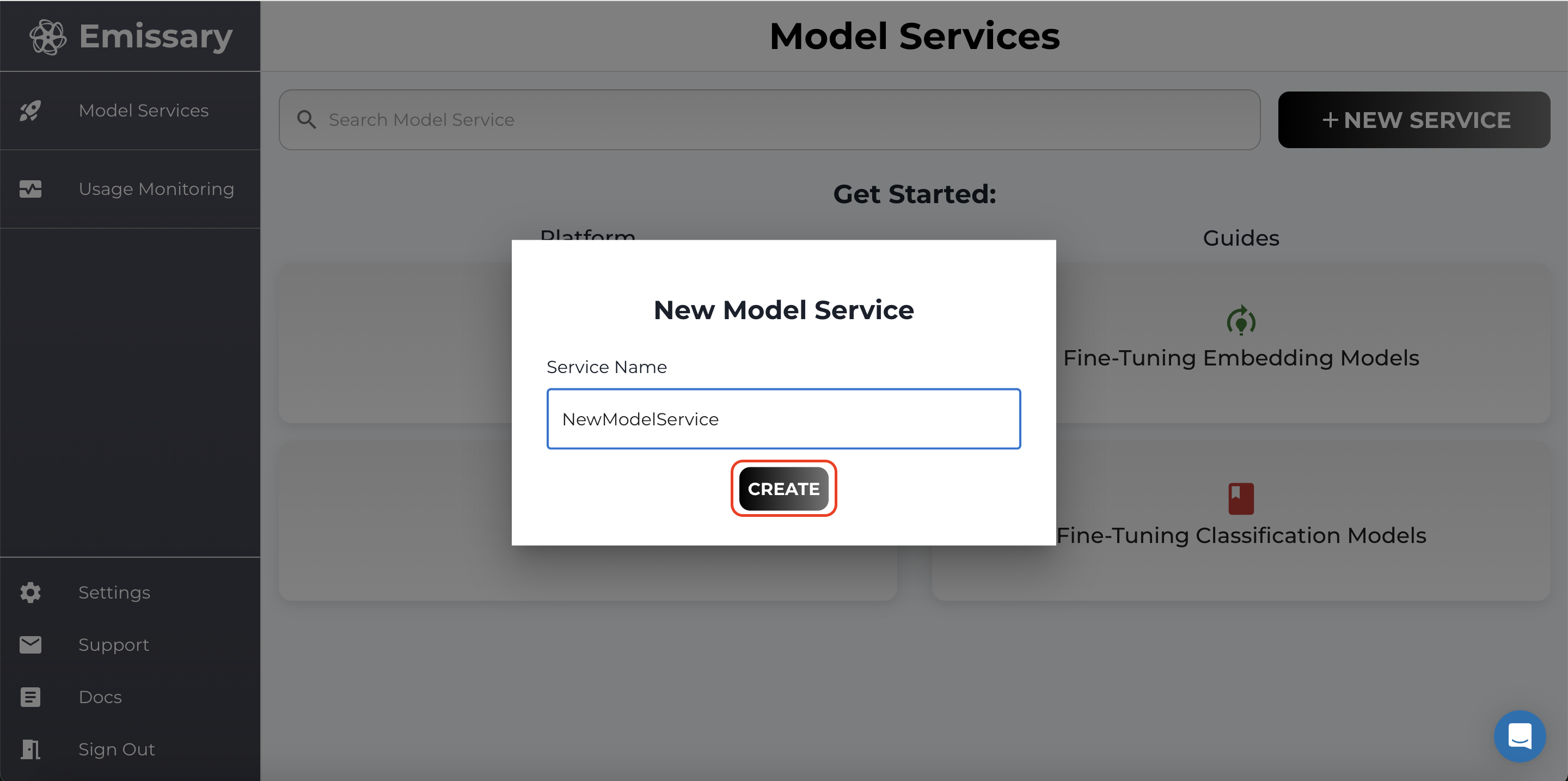

- In the pop-up, enter a new model service name, and click CREATE.

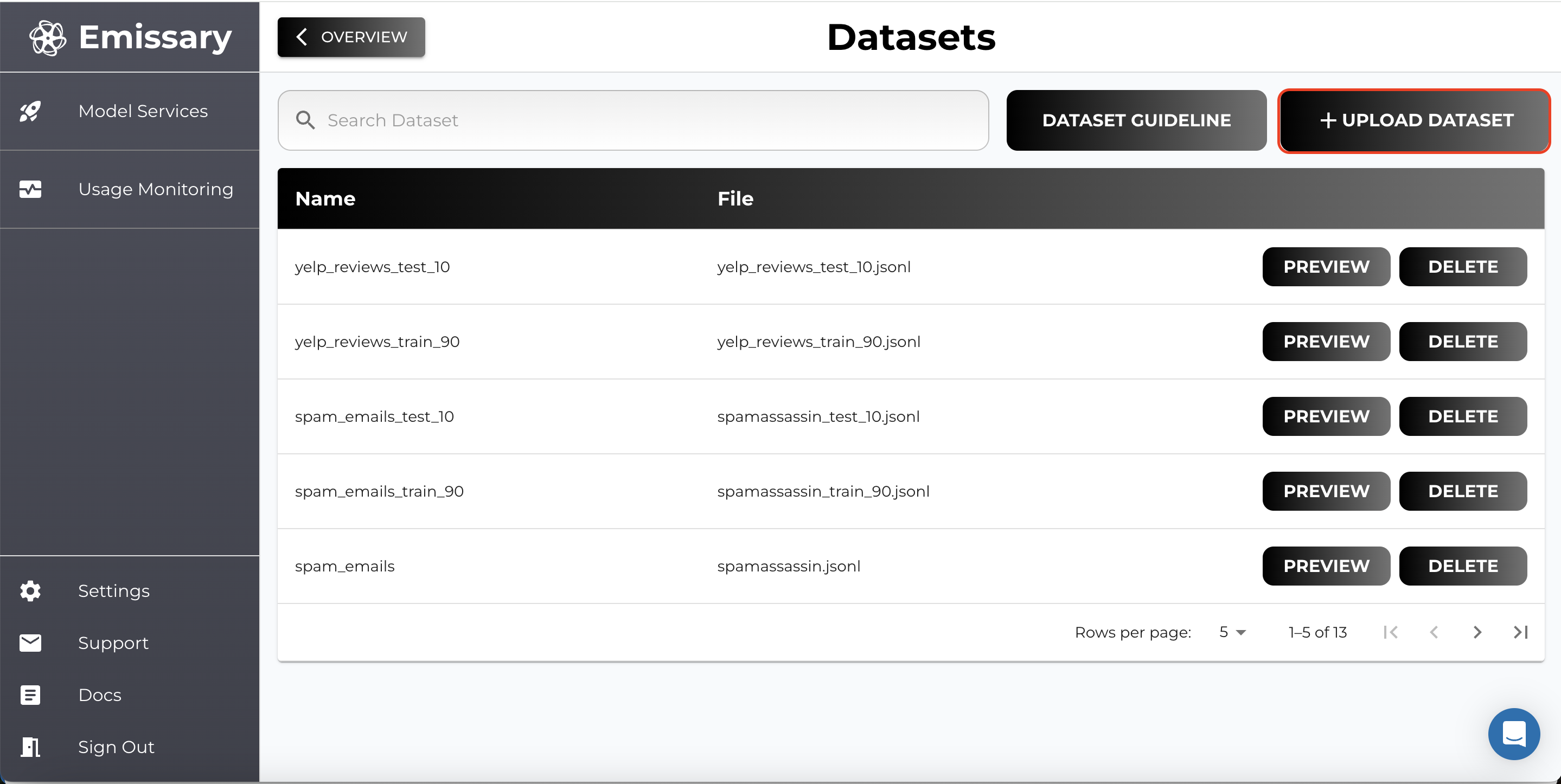

Uploading Datasets

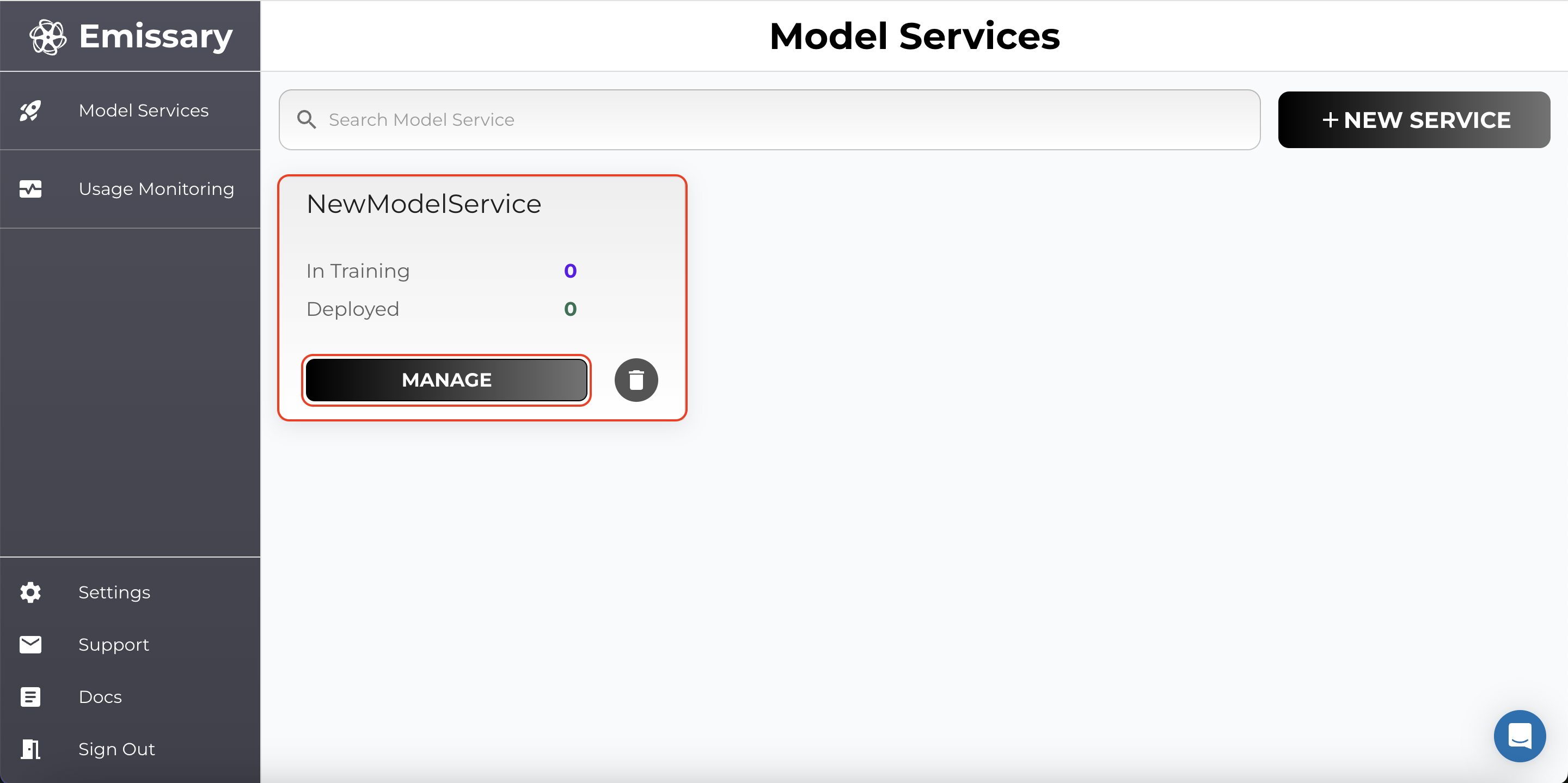

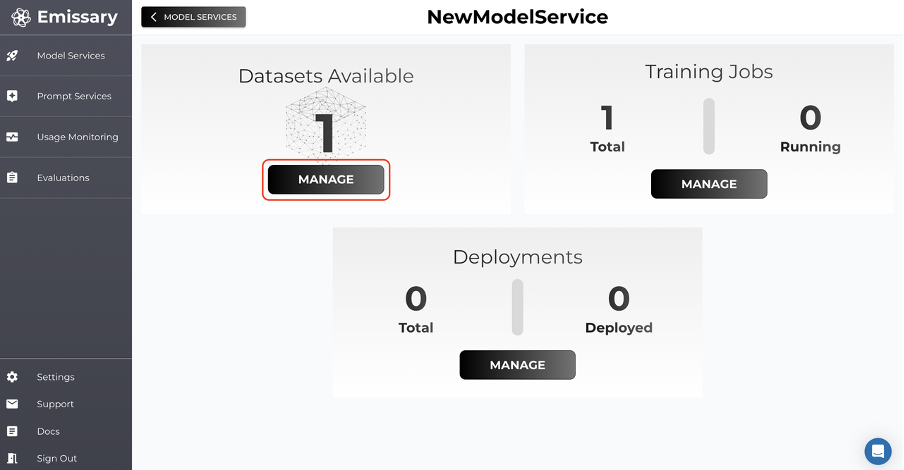

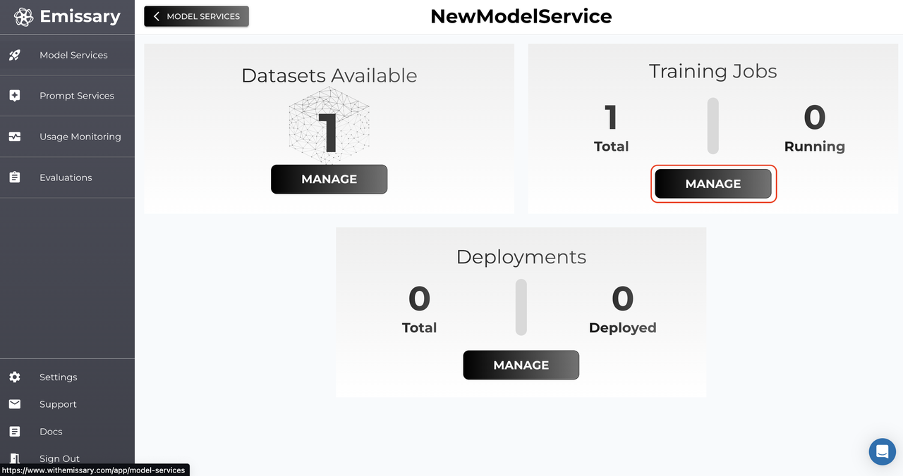

A tile is created for your task. Click Manage to enter the task workspace.

- Click MANAGE in the Datasets Available tile.

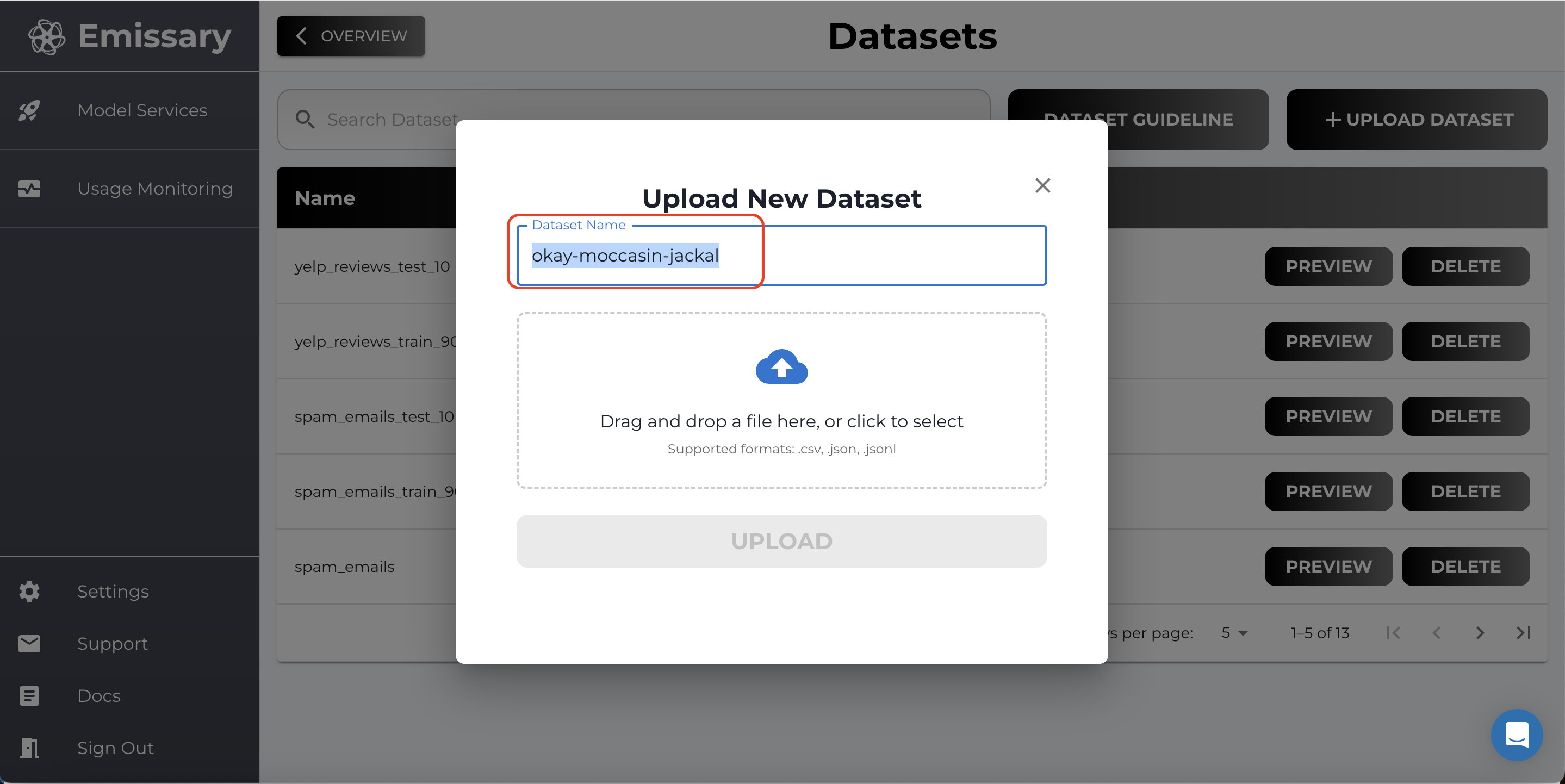

- Click on + UPLOAD DATASET and select training and test datasets.

- Name datasets clearly to distinguish between training and test data (e.g., extraction_train_jsonl, extraction_test_jsonl).

Important Note: For LLMs, the JSON Lines (.jsonl) format is recommended.
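As a rough illustration of the JSON Lines format, the sketch below serializes two made-up extraction examples with one JSON object per line. The `prompt` and `completion` field names, and the choice to store the target as a JSON string, are assumptions for illustration only; check the Quickstart Guide for the schema the platform actually expects.

```python
import json

# Hypothetical records: the "prompt"/"completion" keys are assumed,
# not a schema confirmed by the platform.
records = [
    {
        "prompt": "Extract the company and date: 'Invoice issued to Acme Corp on 2024-03-01.'",
        "completion": json.dumps({"company": "Acme Corp", "date": "2024-03-01"}),
    },
    {
        "prompt": "Extract the company and date: 'Globex signed the contract on 2023-11-15.'",
        "completion": json.dumps({"company": "Globex", "date": "2023-11-15"}),
    },
]


def to_jsonl(rows):
    """Serialize each record as one JSON object per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows) + "\n"


def write_jsonl(path, rows):
    """Write the records to a .jsonl file in UTF-8."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(to_jsonl(rows))


jsonl_text = to_jsonl(records)
```

Calling `write_jsonl("extraction_train.jsonl", records)` would then produce a file ready to upload; the file name here is only an example.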

Model Finetuning

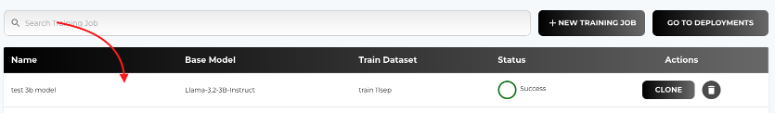

Now, go back one panel by clicking OVERVIEW and then click MANAGE in the Training Jobs tile.

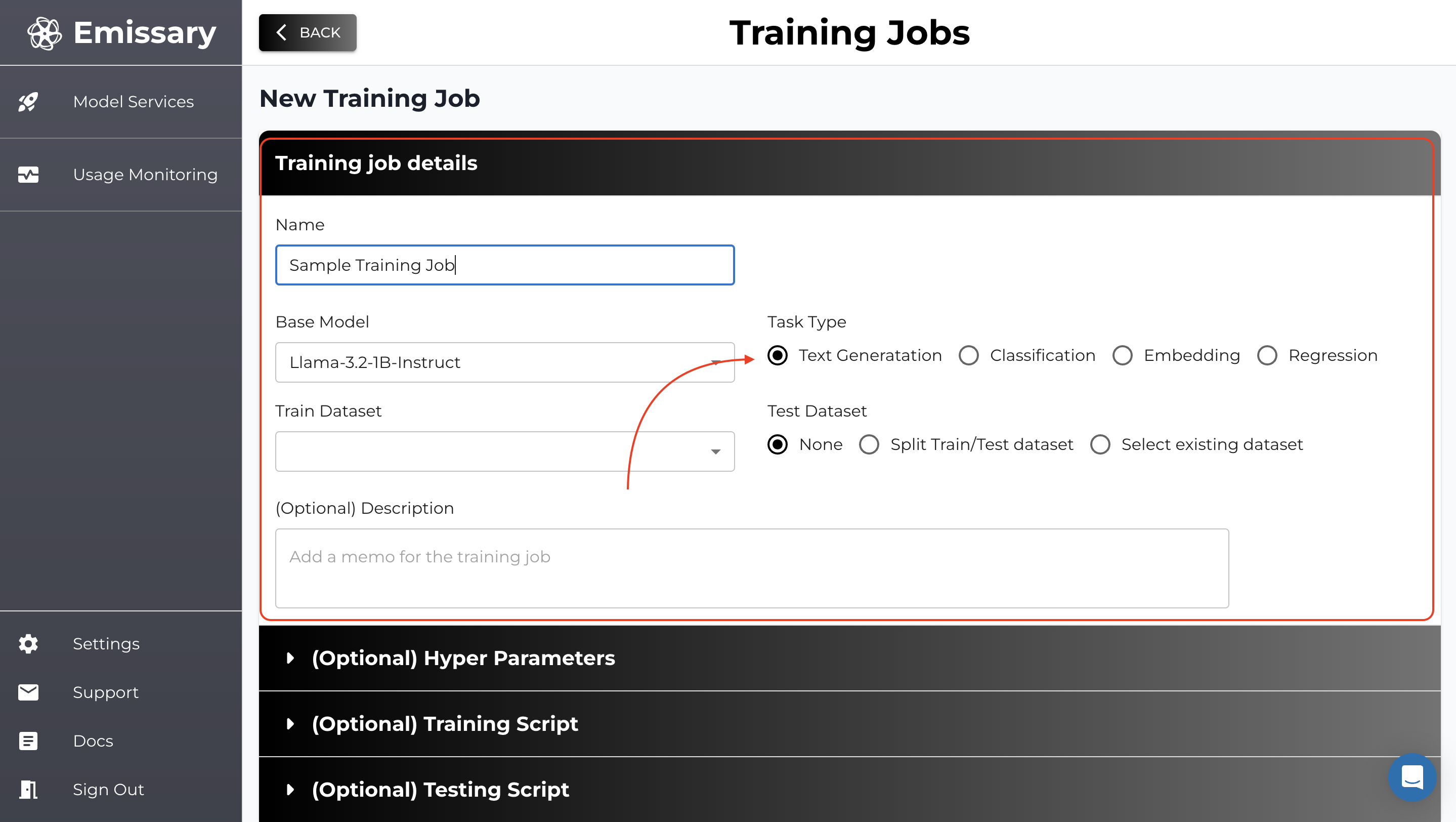

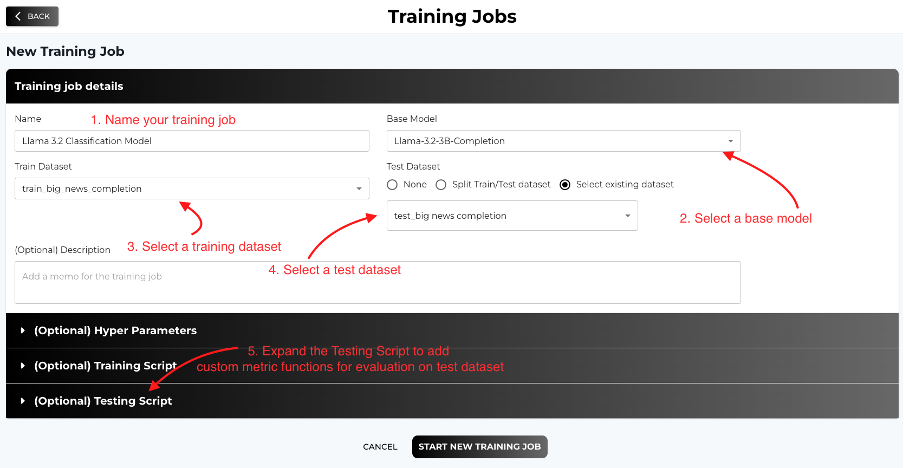

Here, we’ll kick off finetuning. The shortest path to finetuning a model is to click +NEW TRAINING JOB, name the output model, pick a backbone (base model), select the training dataset (which you uploaded in the previous step), and finally hit START NEW TRAINING JOB.

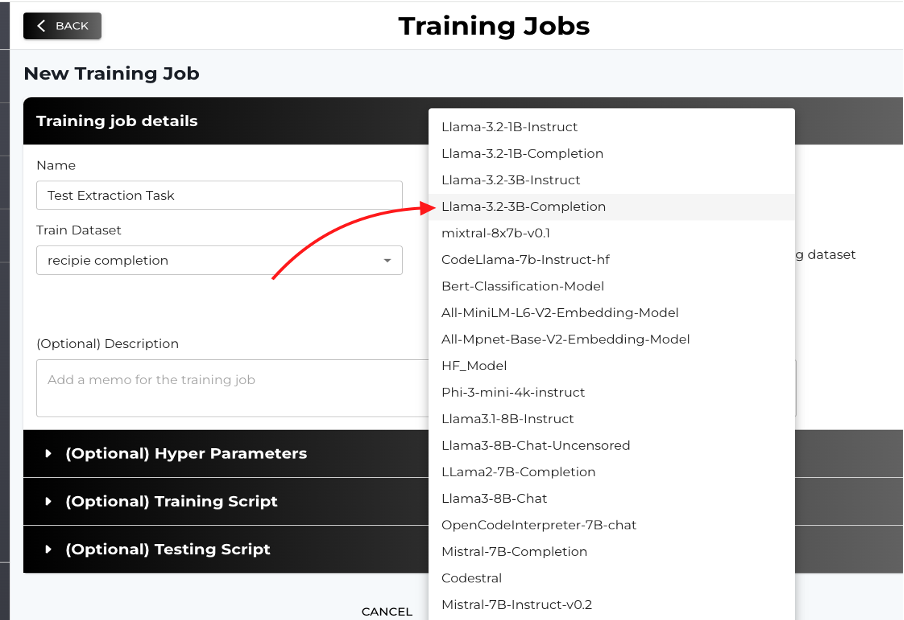

Selecting Base Model

- For Data Extraction, the Llama-3.2-3B-Completion model is recommended.

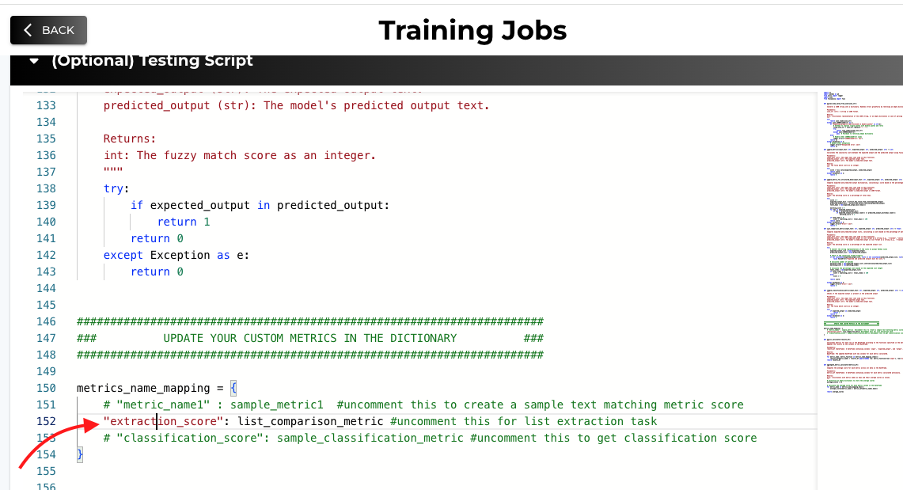

A custom function that compares two lists and returns a matching extraction score is provided. Uncomment the extraction score function to use it.
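The provided function is not reproduced in this guide. As a minimal sketch of what a list-comparison score could look like, the hypothetical `extraction_score` below computes an F1-style overlap between predicted and expected items after light normalization. The name, the normalization, and the scoring rule are all assumptions, not the platform's implementation.

```python
from collections import Counter


def normalize(item):
    """Lowercase and trim an item so trivial formatting differences do not count."""
    return str(item).strip().lower()


def extraction_score(predicted, expected):
    """Return an F1-style overlap in [0, 1] between two lists of extracted items.

    Hypothetical scoring rule for illustration; duplicates are counted
    with multiplicity.
    """
    pred = Counter(normalize(x) for x in predicted)
    gold = Counter(normalize(x) for x in expected)
    if not pred and not gold:
        return 1.0  # nothing to extract and nothing extracted
    overlap = sum((pred & gold).values())  # multiset intersection size
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred.values())
    recall = overlap / sum(gold.values())
    return 2 * precision * recall / (precision + recall)
```

A score of 1.0 means the two lists match item for item; extra or missing items lower precision or recall respectively.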

Training Parameter Configuration

For an in-depth guide to configuring training parameters, refer to the Finetuning Parameter Guide.

Model Monitoring & Evaluation

Using Test Datasets

Including a test dataset allows you to evaluate the model's performance during training.

- Per Epoch Evaluation: The platform evaluates the model at each epoch using the test dataset.

- Metrics and Outputs: View evaluation metrics and generated outputs for test samples.

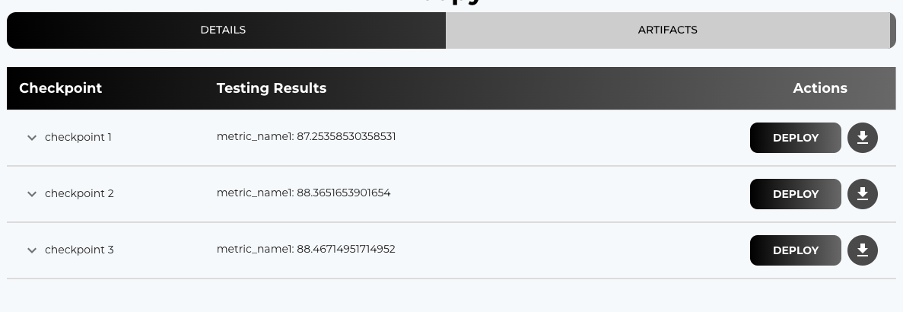

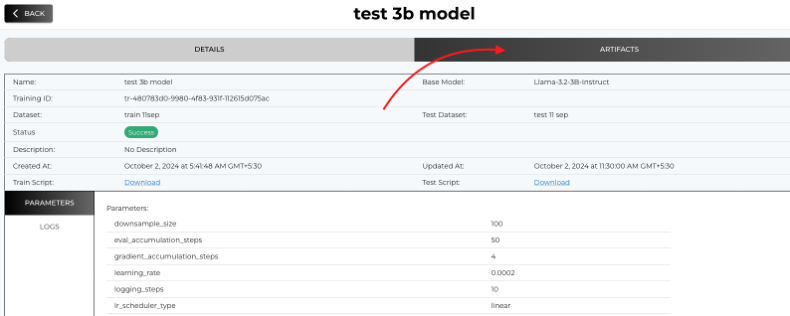

- After training completes, check the results under Training Job --> Artifacts.

Evaluation Metric Interpretation

- Accuracy: Indicates the percentage of correct predictions.

- F1 Score: Balances precision and recall; useful for imbalanced datasets.

- Custom Metrics: Define custom metrics in the testing script to suit your evaluation needs.
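To make the difference between accuracy and F1 concrete, here is a small sketch over binary labels (1 for the positive class). The helper names are illustrative and are not part of the platform's testing script.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that equal the reference label."""
    if not y_true:
        raise ValueError("y_true must not be empty")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Imbalanced example: 5 positives, 95 negatives, model always predicts 0.
y_true = [1] * 5 + [0] * 95
always_negative = [0] * 100
```

On this imbalanced example, always predicting the negative class scores 0.95 accuracy but 0.0 F1, which is why F1 is the more informative number when one class dominates.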

Deployment

For an in-depth walkthrough of deploying a model on Emissary, refer to the Deployment Guide.

Deploying your models allows you to serve them and integrate them into your applications.

Finetuned Model Deployment

- Navigate to the Training Jobs page. From the list of finetuning jobs, select the one you want to deploy.

- Go to the ARTIFACTS tab.

- Select a Checkpoint to Deploy.

Parameter Recalibration

Adjust generation parameters such as do_sample, temperature, and max_new_tokens to tune the model's response behavior.

- do_sample: Enable sampling for more varied outputs.

- temperature: Increase for more creativity, decrease for more deterministic responses.

- max_new_tokens: Limit the length of generated responses.

- top_p and top_k: Control output diversity by restricting sampling to the most probable tokens (top_p by cumulative probability, top_k by count).
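The effect of these parameters can be sketched on a toy next-token distribution. The code below is a simplified illustration in plain Python, not the platform's decoding code; the function names and the toy logits are made up for the example.

```python
import math
import random


def filtered_distribution(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return {token: probability} after temperature, top-k and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature scaling followed by a numerically stable softmax numerator.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]  # keep only the k most probable tokens
    total = sum(w for _, w in ranked)
    ranked = [(tok, w / total) for tok, w in ranked]
    if top_p < 1.0:
        kept, cumulative = [], 0.0
        for tok, prob in ranked:
            kept.append((tok, prob))
            cumulative += prob
            if cumulative >= top_p:  # smallest prefix reaching mass top_p
                break
        ranked = kept
    total = sum(p for _, p in ranked)
    return {tok: p / total for tok, p in ranked}


def pick_token(logits, do_sample=False, rng=None, **sampling):
    """Greedy choice when do_sample is False, otherwise a random draw."""
    if not do_sample:
        return max(logits, key=logits.get)
    dist = filtered_distribution(logits, **sampling)
    rng = rng or random.Random()
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


toy_logits = {"A": 2.0, "B": 1.0, "C": 0.0}
```

For data extraction, where the output should be reproducible, leaving do_sample disabled or using a low temperature is usually the safer starting point; higher temperature, top_k, or top_p values widen the set of tokens that can be drawn.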

Best Practices

- Start Small: Begin with a smaller dataset to validate your setup.

- Monitor Training: Keep an eye on training logs and metrics.

- Iterative Testing: Use the test dataset to iteratively improve your model.

- Data Format: Use the recommended data formats for your chosen model to ensure compatibility and optimal performance.
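The "Start Small" and "Data Format" points can be combined into a quick pre-upload check. The sketch below validates JSON Lines text and draws a small reproducible subset; the required keys `prompt` and `completion` are assumed field names, so adjust them to whatever schema your dataset uses.

```python
import json
import random


def validate_jsonl(text, required_keys=("prompt", "completion")):
    """Return (line_number, problem) pairs for lines that fail basic checks."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            problems.append((number, "blank line"))
            continue
        try:
            row = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((number, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(row, dict):
            problems.append((number, "not a JSON object"))
            continue
        missing = [key for key in required_keys if key not in row]
        if missing:
            problems.append((number, f"missing keys: {missing}"))
    return problems


def small_subset(lines, size, seed=0):
    """Draw a reproducible random subset for a first trial run."""
    rng = random.Random(seed)
    return rng.sample(lines, min(size, len(lines)))
```

An empty list from `validate_jsonl` means every line parsed and carried the expected keys; a subset of a few dozen lines is often enough to confirm that a training job starts and evaluates correctly before committing to the full dataset.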